Of course, further test aspects could be included, e.g. the access speed of the connection, the number of records present in the database, etc. Using the graphical representation in terms of a tree, the selected aspects and their corresponding values can quickly be reviewed. Facilitated by an intuitive graphical display in the interface, the classification rules from the root to a leaf are simple to understand and interpret. Input images can be numerical images, such as reflectance values of remotely sensed data, categorical images, such as a land use layer, or a combination of both. The user must first use the training samples to grow a classification tree. The second caveat is that, like neural networks, CTA is perfectly capable of learning even non-diagnostic characteristics of a class.

A crucial step in creating a decision tree is to find the best split of the data into two subsets. This is also used in the scikit-learn library for Python, which is often used in practice to build a decision tree. It’s important to keep in mind the limitations of decision trees, of which the most prominent is the tendency to overfit. Here \(D_1\) and \(D_2\) are subsets of \(D\), \(p_j\) is the probability of samples belonging to class \(j\) at a given node, and \(c\) is the number of classes.
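The formula that these symbols belong to does not appear in the text. A common form of the Gini split criterion that is consistent with the symbols defined above is sketched here; it is an assumption about what was intended, not a quotation of the original expression:

\[
\mathrm{Gini}(D) = 1 - \sum_{j=1}^{c} p_j^{2},
\qquad
\mathrm{Gini}_{\text{split}}(D) = \frac{|D_1|}{|D|}\,\mathrm{Gini}(D_1) + \frac{|D_2|}{|D|}\,\mathrm{Gini}(D_2)
\]

Under this reading, the best split is the one with the smallest weighted impurity over the two subsets.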

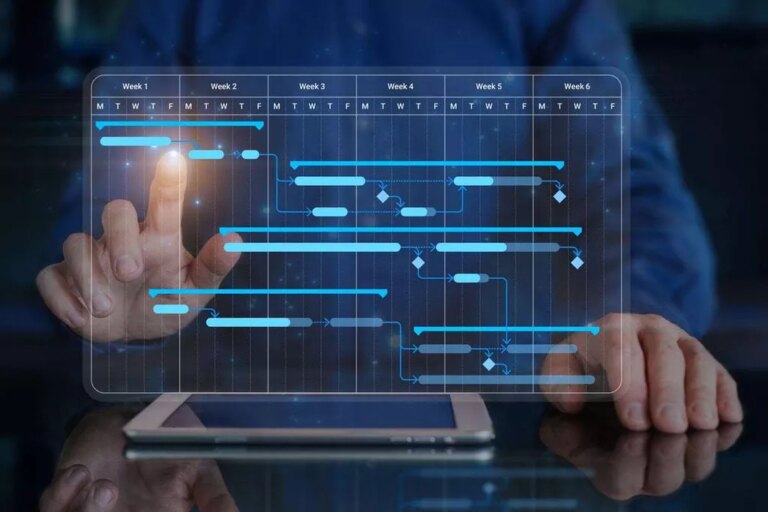

TEST DESIGN USING THE CLASSIFICATION TREE METHOD

Applying this rule to the test set yields a misclassification rate of 0.14. In general, one class may occupy several leaf nodes and occasionally no leaf node. The intuition here is that the class distributions in the two child nodes should be as different as possible and the proportion of data falling into either of the child nodes should be balanced. Next, we define the difference between the weighted impurity measure of the parent node and the two child nodes. The classification tree algorithm goes through all the candidate splits to select the best one with maximum Δi.
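As a rough illustration of selecting the split with maximum Δi, the sketch below scores two made-up candidate splits of a toy node. The Gini index is used as the impurity measure, and the labels, split names, and function names are all hypothetical choices for this example.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def impurity_decrease(parent, left, right):
    """Delta i: parent impurity minus the weighted impurity of the two children."""
    n = len(parent)
    p_left = len(left) / n
    p_right = len(right) / n
    return gini(parent) - p_left * gini(left) - p_right * gini(right)

# Hypothetical toy node with two classes.
parent = ["a", "a", "a", "b", "b", "b"]

# Two hypothetical candidate splits of the same node.
candidate_splits = {
    "clean": (["a", "a", "a"], ["b", "b", "b"]),
    "mixed": (["a", "a", "b"], ["a", "b", "b"]),
}

scores = {
    name: impurity_decrease(parent, left, right)
    for name, (left, right) in candidate_splits.items()
}
best = max(scores, key=scores.get)
print(best, scores)
```

The split that separates the classes completely removes all of the parent's impurity, so it is the one selected.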

- Depending on the situation and knowledge of the data and decision trees, one may opt to use the positive estimate for a quick and easy solution to their problem.

- Classification trees are a hierarchical way of partitioning the space.

- In the end, the cost complexity measure comes as a penalized version of the resubstitution error rate.

- Tree-structured classifiers are constructed by repeated splits of the space X into smaller and smaller subsets, beginning with X itself.

- According to the class assignment rule, we would choose a class that dominates this leaf node, 3 in this case.
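The cost complexity measure mentioned in the list above can be sketched as the resubstitution error rate plus a penalty proportional to the number of leaf nodes. The subtree names, error rates, and leaf counts below are invented for illustration only.

```python
def cost_complexity(resub_error, n_leaves, alpha):
    """Penalized resubstitution error: R(T) + alpha * (number of leaf nodes)."""
    return resub_error + alpha * n_leaves

# Hypothetical subtrees: (resubstitution error rate, number of leaves).
subtrees = {"large": (0.10, 12), "medium": (0.14, 5), "small": (0.25, 2)}

def best_subtree(alpha):
    """Name of the subtree with the smallest cost complexity measure."""
    return min(subtrees, key=lambda name: cost_complexity(*subtrees[name], alpha))

for alpha in (0.0, 0.01, 0.05):
    print(alpha, best_subtree(alpha))
```

With no penalty the largest tree wins on resubstitution error alone; as the penalty grows, smaller subtrees become preferable.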

In most cases, the interpretation of results summarized in a tree is very simple. The Gini index and cross-entropy are measures of impurity: they are higher for nodes with more equal representation of different classes and lower for nodes represented largely by a single class. As a node becomes more pure, these loss measures tend toward zero. The left hand side shows the mean of neighbouring values for age. We then have the following possible splits. Recall that a regression tree maximizes the reduction in the error sum of squares at each split.
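A small sketch of the two impurity measures, showing how both shrink toward zero as a two-class node becomes purer. The base-2 logarithm is an assumption made here; other bases only rescale the cross-entropy.

```python
import math

def gini_index(proportions):
    """Gini index for a node, given its class proportions."""
    return 1.0 - sum(p * p for p in proportions)

def cross_entropy(proportions):
    """Cross-entropy for a node; classes with zero proportion contribute nothing."""
    return sum(p * math.log2(1.0 / p) for p in proportions if p > 0)

# Class proportions of increasingly pure hypothetical nodes.
for proportions in [(0.5, 0.5), (0.8, 0.2), (0.95, 0.05), (1.0, 0.0)]:
    print(proportions, round(gini_index(proportions), 4), round(cross_entropy(proportions), 4))
```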

Optimal Subtrees

The error rate estimated by cross-validation on the training dataset, which contains only 200 data points, is also 0.30. In this case, cross-validation did a very good job of estimating the error rate. Each of the seven lights has probability 0.1 of being in the wrong state, independently of the others. For the training data set, 200 samples are generated according to the specified distribution. Δi is the difference between the impurity measure for node t and the weighted sum of the impurity measures for the right child and the left child nodes.
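Written out, the definition of Δi above can be expressed as follows. The notation \(s\) for the split, \(t_L\) and \(t_R\) for the child nodes, and \(p_L\), \(p_R\) for the proportions of the points in node \(t\) that go left and right is assumed here for illustration:

\[
\Delta i(s, t) = i(t) - p_L \, i(t_L) - p_R \, i(t_R), \qquad p_L + p_R = 1
\]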

In the regression tree section, we discussed three methods for managing a tree’s size to balance the bias-variance tradeoff. The same three methods can be used for classification trees with slight modifications, which we cover next. For a full overview on these methods, please review the regression tree section. Boosting, like bagging, is another general approach for improving prediction results for various statistical learning methods.

3 – Estimate the Posterior Probabilities of Classes in Each Node

The splitting criterion easily generalizes to multiple classes, and any multi-way partitioning can be achieved through repeated binary splits. To choose the best splitter at a node, the algorithm considers each input field in turn. This is repeated for all fields, and the winner is chosen as the best splitter for that node.
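The field-by-field search described above might look like the following sketch. It assumes numeric fields, threshold questions of the form "value <= c", and the Gini index as the impurity measure; the field names and data are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split_for_field(values, labels):
    """Best threshold on one numeric field, scored by Gini impurity decrease."""
    n = len(labels)
    parent_impurity = gini(labels)
    distinct = sorted(set(values))
    best_gain, best_threshold = 0.0, None
    # Midpoints between neighbouring distinct values cover every distinct division.
    for low, high in zip(distinct, distinct[1:]):
        threshold = (low + high) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        gain = parent_impurity - len(left) / n * gini(left) - len(right) / n * gini(right)
        if gain > best_gain:
            best_gain, best_threshold = gain, threshold
    return best_gain, best_threshold

def best_splitter(rows, labels, field_names):
    """Try each field in turn and keep the overall winner."""
    best = (0.0, None, None)
    for index, name in enumerate(field_names):
        column = [row[index] for row in rows]
        gain, threshold = best_split_for_field(column, labels)
        if gain > best[0]:
            best = (gain, name, threshold)
    return best

# Hypothetical pixels: (red reflectance, infrared reflectance).
rows = [(0.10, 0.60), (0.12, 0.20), (0.30, 0.65), (0.32, 0.25)]
labels = ["veg", "soil", "veg", "soil"]
gain, field, threshold = best_splitter(rows, labels, ("red", "infrared"))
print(field, threshold, gain)
```

In this toy data the infrared field separates the two classes completely, so it is chosen over the red field.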

CTE XL Professional was available on win32 and win64 systems. Test cases are formed by combining different classes from all classifications. Is an example of a semantics-based, database-centered approach.

Determining Goodness of Split

This approach does not have anything to do with the impurity function. The way we choose the question, i.e., split, is to measure every split by a ‘goodness of split’ measure, which depends on the split question as well as the node to split. The ‘goodness of split’ in turn is measured by an impurity function. Since the training data set is finite, there are only finitely many thresholds c that result in a distinct division of the data points. The pool of candidate splits that we might select from involves a set Q of binary questions of the form: is \(\mathbf{x} \in A\)? Basically, we ask whether our input \(\mathbf{x}\) belongs to a certain region, \(A\).

\(p_L\) then becomes the relative proportion of the left child node with respect to the parent node. Here \(p(j \mid t)\) is the estimated posterior probability of class \(j\) given that a point is in node \(t\). This is called the impurity function or the impurity measure for node \(t\). We also need a stop-splitting rule, i.e., we have to know when it is appropriate to stop splitting. In an extreme case, one could ‘grow’ the tree to the extent that every leaf node contains only a single data point. Normally \(\mathbf{X}\) is a multidimensional Euclidean space.
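One simple way to estimate the posterior probability of each class in a node is to use the class frequencies among the training points that fall into it. This assumes class priors are taken from the training proportions. The sketch below also applies the class assignment rule of picking the dominant class; the leaf contents are made up.

```python
from collections import Counter

def posterior_probabilities(node_labels):
    """Estimate p(j | t) as the fraction of points in node t that belong to class j."""
    n = len(node_labels)
    return {label: count / n for label, count in Counter(node_labels).items()}

def assign_class(node_labels):
    """Class assignment rule: pick the class with the largest estimated p(j | t)."""
    probabilities = posterior_probabilities(node_labels)
    return max(probabilities, key=probabilities.get)

# Hypothetical leaf node holding six training points from classes 1, 3 and 7.
leaf = [3, 3, 3, 1, 7, 3]
print(posterior_probabilities(leaf), assign_class(leaf))
```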

Decision Tree Example:

A classification tree is composed of branches that represent attributes, while the leaves represent decisions. In use, the decision process starts at the trunk and follows the branches until a leaf is reached. The figure above illustrates a simple decision tree based on a consideration of the red and infrared reflectance of a pixel. To conduct cross validation, then, we would build the tree using the Gini index or cross-entropy for a set of hyperparameters, then pick the tree with the lowest misclassification rate on validation samples.
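The decision process of following branches until a leaf is reached can be sketched as below. The tree structure, thresholds, and class names are invented for illustration and are not taken from the figure.

```python
# Hypothetical tree: internal nodes test one attribute against a threshold,
# leaves hold the decision. Thresholds and class names are made up.
tree = {
    "attribute": "infrared",
    "threshold": 0.4,
    "low": {"leaf": "water"},
    "high": {
        "attribute": "red",
        "threshold": 0.2,
        "low": {"leaf": "vegetation"},
        "high": {"leaf": "bare soil"},
    },
}

def classify(node, pixel):
    """Start at the root and follow branches until a leaf is reached."""
    while "leaf" not in node:
        branch = "low" if pixel[node["attribute"]] <= node["threshold"] else "high"
        node = node[branch]
    return node["leaf"]

print(classify(tree, {"red": 0.1, "infrared": 0.6}))
```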

It is straightforward to replace decision tree learning with other learning techniques. From our experience, decision tree learning is a good supervised learning algorithm to start with for comment analysis and text analytics in general. The database-centered solutions are characterized by a database acting as the central hub for all collected sensor data; consequently, all search and manipulation of sensor data are performed over the database.

Classification and regression trees implicitly perform feature selection. CART models are formed by picking input variables and evaluating split points on those variables until an appropriate tree is produced. A regression tree is used when the target (response) variable is continuous, and the tree predicts its value, for example when the response variable is the temperature of the day.
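For a continuous response, evaluating split points can be sketched as choosing the threshold that most reduces the error sum of squares. The data below (hour of day against temperature) and the function names are hypothetical.

```python
def sse(values):
    """Error sum of squares around the mean."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def best_regression_split(xs, ys):
    """Threshold on xs that maximizes the reduction in the error sum of squares."""
    total = sse(ys)
    distinct = sorted(set(xs))
    best_reduction, best_threshold = 0.0, None
    # Candidate thresholds are the means of neighbouring distinct values.
    for low, high in zip(distinct, distinct[1:]):
        threshold = (low + high) / 2
        left = [y for x, y in zip(xs, ys) if x <= threshold]
        right = [y for x, y in zip(xs, ys) if x > threshold]
        reduction = total - sse(left) - sse(right)
        if reduction > best_reduction:
            best_reduction, best_threshold = reduction, threshold
    return best_threshold, best_reduction

# Hypothetical data: hour of day and temperature.
hours = [6, 8, 10, 14, 16, 18]
temps = [10.0, 11.0, 12.0, 20.0, 21.0, 22.0]
threshold, reduction = best_regression_split(hours, temps)
print(threshold, reduction)
```

Each resulting leaf would then predict the mean response of the training points it contains.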